When something goes wrong in your system, you don't just need data, you need the right data connected in the right way. The correlation layer is what transforms separate streams of metrics, logs, and traces into a coherent investigation experience. It's the difference between spending hours hunting for clues and quickly identifying the root cause.

The investigation flow

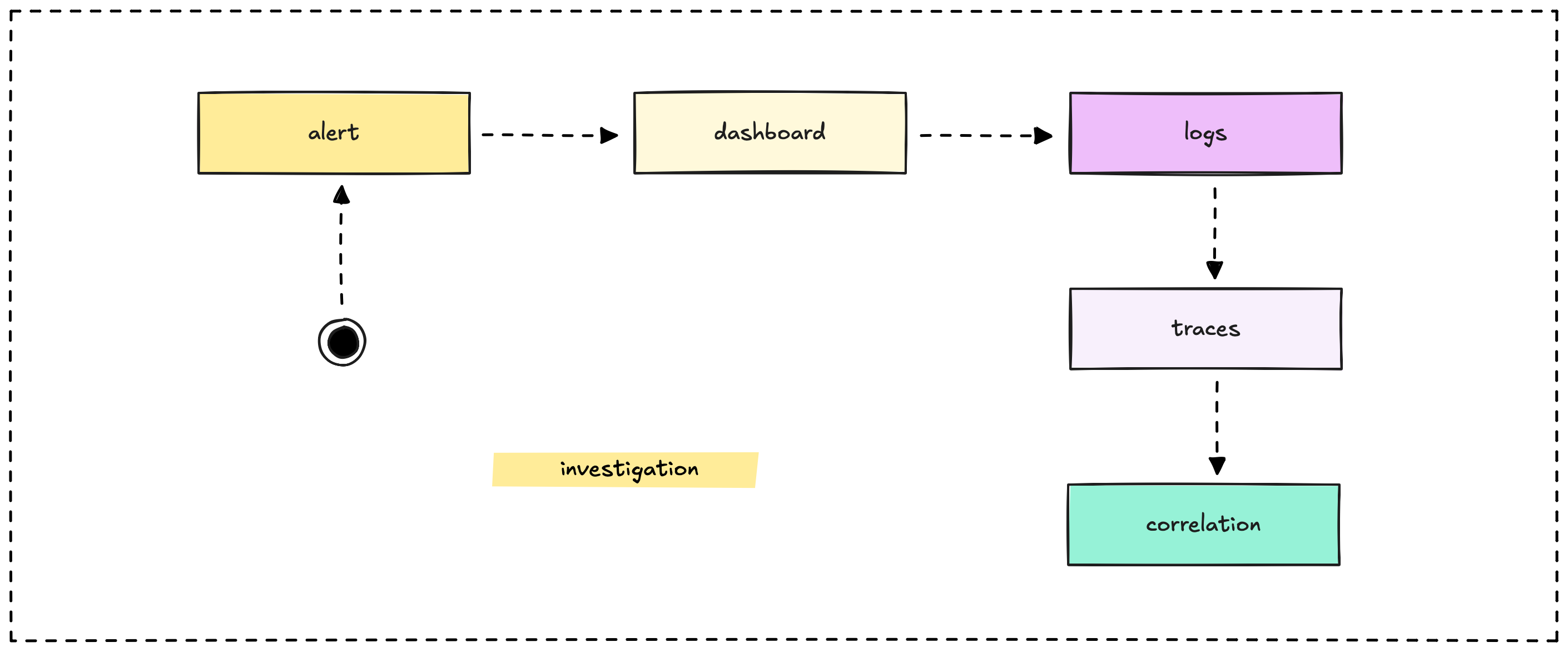

Here's how these pieces connect in a real implementation:

When something goes wrong, your monitoring system should guide you through a logical investigation process. You start with high-level metrics that show the problem exists, then drill down through logs and traces to find the root cause.

The investigation flow:

- Alerts tell you something is wrong

- Dashboards show you the scope and impact

- Logs provide details about what happened

- Traces show you where the problem occurred

- Correlation connects all this data so you can fix the issue

Each component serves a specific purpose in this investigation flow. When they work together smoothly, debugging becomes systematic rather than frantic.

Starting with alerts

A good alert doesn't just tell you something is broken, it gives you enough context to start investigating effectively. The alert should include:

- What service or component is affected

- How severe the issue appears to be

- When the problem started

- A link to relevant dashboards

Example alert: "API response time >5s for 3 minutes. Dashboard: https://grafana.example.com/api-performance"

Moving to dashboards

Your dashboard should immediately show you the bigger picture. When you follow the alert link, you should see:

- Is this affecting all users or just some?

- Is the problem getting worse or staying stable?

- Are other related metrics showing issues?

- When exactly did this pattern start?

The dashboard helps you understand scope and urgency before diving into details.

Drilling into logs

Once you understand the scope, logs help you find specific examples of the problem. But only if those logs are connected to your metrics through shared identifiers.

Good correlation: Your dashboard shows high error rates at 14:32. You search logs for errors around that time and immediately find relevant error messages because they include timestamps and request IDs.

Poor correlation: You see high error rates but can't easily find the corresponding log entries because timestamps don't match or there's no shared context.

Following traces

For complex issues that span multiple services, traces show you exactly where time is being spent or where errors occur in the request flow.

The key insight: Traces become powerful when you can jump from a log entry (showing what failed) directly to the trace (showing why it failed and where).

Making correlation work in practice

Correlation isn't automatic, it requires planning and consistent implementation across your monitoring stack.

Shared identifiers are everything

The foundation of good correlation is consistent use of shared identifiers across all your telemetry:

Request IDs appear in metrics, logs, and traces for the same user request. When you see a problem in one place, you can immediately find related data in other systems.

User IDs help track issues that affect specific users across different parts of your system.

Session IDs group related activities together, especially useful for debugging user experience issues.

Transaction IDs connect business processes that might span multiple technical operations.

Structured logging makes correlation possible

Free-form log messages are hard to correlate with other data. Structured logs with consistent fields make correlation automatic:

{

"timestamp": "2025-05-14T14:32:15Z",

"level": "ERROR",

"request_id": "req_abc123",

"user_id": "user_456",

"service": "api-gateway",

"message": "Database timeout",

"duration_ms": 5000

}

With structured logs, you can easily jump from a metrics anomaly to the specific log entries that caused it.

Time synchronization matters

If your metrics show a spike at 14:32 but your logs are timestamped 14:31 because of clock drift, correlation becomes much harder. Ensure all your systems have synchronized clocks.

Use UTC everywhere. Time zones and daylight saving time make correlation unnecessarily complicated.

Context propagation through traces

Distributed traces automatically propagate context through your system, making correlation easier. When service A calls service B, the trace context flows along with the request.

This means a single trace ID can connect:

- The original user request

- Database queries triggered by that request

- API calls to external services

- Background jobs spawned by the request

Step-by-step implementation guide

Here's how to implement effective correlation in your monitoring system:

Step 1: Add correlation identifiers to all telemetry

Every piece of telemetry should include shared identifiers that link related data together.

For synthetic tests:

const { chromium } = require('playwright');

const requestId = `req${Math.random().toString(36).slice(2)}`;

const traceId = `trace${Math.random().toString(36).slice(2)}`;

(async () => {

const browser = await chromium.launch();

const page = await browser.newPage();

const startTime = Date.now();

await page.goto('https://yourblog.github.io/post/1');

const loadTime = Date.now() - startTime;

// Log with correlation IDs

console.log(`${new Date().toISOString()} [INFO] Page loaded in ${loadTime/1000}s, request_id=${requestId}, trace_id=${traceId}`);

// Push metric to Prometheus with labels

// page_load_time{request_id="req456", trace_id="trace123"} 5.0

await browser.close();

})();

For application code: Use OpenTelemetry to automatically generate and propagate trace IDs across your system. This ensures consistent identifiers without manual work.

Step 2: Store telemetry in appropriate systems

Different types of data need different storage systems, but they should all include the same correlation identifiers:

Metrics in Prometheus:

page_load_time{request_id="req456", endpoint="/post/1"} 5.0

arweave_fetch_duration{request_id="req456", trace_id="abc123"} 4.2

Logs in Loki or Elasticsearch:

2025-05-14T19:41:00Z [ERROR] Arweave fetch failed, request_id=req456, trace_id=abc123, error="timeout after 5s"

Traces in Jaeger: A trace with trace_id=abc123 showing spans for the HTTP request, database query, and Arweave API call.

Step 3: Set up dashboards with drill-down capabilities

Create Grafana dashboards that make correlation easy:

Main dashboard panels:

- Time-series chart showing page_load_time over time

- Table showing recent error logs with request IDs

- Links to trace search filtered by time period

Drill-down workflow:

- See a spike in response time on the main chart

- Click to filter logs by the same time period

- Find specific request IDs from error logs

- Click to view the corresponding trace in Jaeger

Grafana configuration tips:

- Use variables to pass request_id and trace_id between panels

- Configure data source links to automatically open Jaeger with the right trace ID

- Add annotations to overlay deployment events on metric charts

Step 4: Define your debugging workflow

Establish a standard process for your team to follow when investigating issues:

The correlation debugging workflow:

- Start with metrics: Identify an anomaly in Grafana (page load time spikes to 5 seconds)

- Drill down to logs: Use the request ID to query Loki for related logs ("Arweave fetch failed")

- Inspect traces: Use the trace ID to view the request flow in Jaeger (Arweave API call took 4 seconds and timed out)

- Resolve the issue: Use combined insights to fix the problem (add retry logic with shorter timeout)

Document this workflow in a runbook so everyone on your team knows how to investigate issues systematically.

Step 5: Automate correlation where possible

Use tools and features that make correlation automatic:

Grafana Explore: View metrics, logs, and traces in a single interface, automatically linking them via shared identifiers.

Grafana annotations: Overlay log events (like errors) directly on metric charts, making correlation visual.

Alert context: Include relevant dashboard links and correlation IDs in alert messages so responders can immediately jump to the right data.

Step 6: Test your correlation setup

Validate that correlation works by simulating failures:

Test scenario: Temporarily break your Arweave integration and verify that:

- The arweave_fetch_success metric drops to 0 in Grafana

- Error logs appear in Loki with the correct request ID

- Traces in Jaeger show the failed API call with matching trace ID

- You can easily navigate from metric → log → trace using the correlation IDs

Correlation strategies that work

Based on our experience, here are practical approaches to building effective correlation:

Start with request IDs

Even if you don't implement full distributed tracing immediately, adding request IDs to your logs and metrics creates correlation opportunities. Generate a unique ID for each request and include it in all related log entries.

Add correlation gradually

You don't need perfect correlation from day one. Start with your most critical services and gradually add correlation capabilities as you expand your monitoring.

Progressive correlation:

- Add request IDs to logs and alerts

- Include request IDs in custom metrics

- Implement distributed tracing for complex workflows

- Connect business events to technical metrics

Use your monitoring tools' correlation features

Most modern monitoring platforms provide built-in correlation features:

- Grafana can link between different data sources

- Many APM tools automatically correlate metrics, logs, and traces

- Log aggregation systems often provide integration with metrics platforms

Don't reinvent correlation: use what your tools provide.

Design dashboards for investigation

Your dashboards should guide users through the investigation flow. A good incident investigation dashboard might include:

- High-level service health metrics

- Error rate trends with drill-down links

- Recent error logs with request IDs

- Links to trace search filtered for the time period

Why logs work best as a correlation layer

You might wonder: why not just use logs for everything? Here's why the hybrid approach works better:

Metrics for dashboards: Prometheus is optimized for aggregation and visualization. It can quickly answer questions like "What's the average response time over the last hour?" This makes dashboards fast and responsive.

Logs for context: Logs provide rich context that metrics can't capture. When you see a spike in error rates, logs tell you exactly what went wrong and for which specific requests.

Traces for flow: Traces show you how requests move through your system, helping you identify bottlenecks in complex workflows.

The best of all worlds: By using metrics for dashboards and logs/traces for correlation, you get fast visualizations and detailed debugging capabilities.

Common correlation anti-patterns

We've seen several patterns that make correlation harder rather than easier:

Inconsistent identifier formats

Using request_id in logs but requestId in traces makes automatic correlation impossible. Establish conventions and stick to them across all systems.

Missing context in alerts

Alerts that just say "error rate high" without including relevant dashboard links or context force responders to start their investigation from scratch every time.

Tool silos

Having metrics in one tool, logs in another, and traces in a third without any connection between them creates investigation friction. Plan for integration from the beginning.

Over-correlation

Including too many identifiers in every log message creates noise without adding value. Focus on the identifiers you actually use for correlation.

Measuring correlation effectiveness

Good correlation makes debugging faster and more systematic. You can measure this by tracking:

- Time from alert to root cause identification

- Number of tools or dashboards needed during investigation

- Team feedback on debugging experience

The goal: When something goes wrong, your team should be able to quickly understand what happened, why it happened, and how to fix it.

Correlation isn't just a technical capability, it's what transforms monitoring data into actionable intelligence. When done well, it makes the difference between reactive firefighting and proactive system management.