In this month's market report, we explore the evolving landscape of artificial intelligence, API techniques, tooling, programming languages, and the intersection of design and engineering. We highlight the shifts and emerging trends across these domains that are shaping the future of technology and software development.

Subtle advances in app design for LLMs pave the way for advanced assistants

Apps like Devin AI, Devika, GPT-Pilot (also backed by Y Combinator), and many others signal a more robust AI scene in which platforms are now powerful and fast enough to host complex agents. As the ways to design an app for LLMs multiply, the surrounding platforms are delivering better performance, lower latency, and more robust logic for maintaining coherence, which makes multi-agent application designs practical. Libraries like LangGraph and AutoGen have made great strides toward complex, stateful AI application design. In addition, there is a lot of research into different styles of application design that we might see grow in the upcoming months, such as LLMCompiler, Plan-and-Execute, Reasoning without Observation (ReWOO), and AI feedback loops.
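To make one of these design styles concrete, here is a minimal sketch of the Plan-and-Execute idea: a planner produces the full list of steps up front, and an executor then works through them in order, seeing the results of earlier steps. The names `plan_and_execute`, `toy_planner`, and `toy_executor` are hypothetical and chosen for illustration; in a real application the planner and executor would each wrap an LLM call, which is stubbed out here so the example runs on its own.

```python
from typing import Callable, List

# Hypothetical type aliases: a planner maps a task to ordered steps,
# an executor maps (step, earlier results) to a result string.
Planner = Callable[[str], List[str]]
Executor = Callable[[str, List[str]], str]


def plan_and_execute(task: str, planner: Planner, executor: Executor) -> List[str]:
    """Plan once up front, then execute each step in order."""
    steps = planner(task)
    results: List[str] = []
    for step in steps:
        # Each step can see the results of the steps before it.
        results.append(executor(step, results))
    return results


def toy_planner(task: str) -> List[str]:
    # Stand-in for an LLM call that breaks a task into steps.
    return [f"research: {task}", f"draft: {task}", f"review: {task}"]


def toy_executor(step: str, previous: List[str]) -> str:
    # Stand-in for an LLM or tool call that carries out a single step.
    return f"done({step}) after {len(previous)} prior step(s)"


if __name__ == "__main__":
    for line in plan_and_execute("summarize the report", toy_planner, toy_executor):
        print(line)
```

Separating planning from execution means the (usually more expensive) planning call happens once, while the per-step calls can be smaller and cheaper. Real frameworks typically add re-planning when a step fails, which this sketch leaves out.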

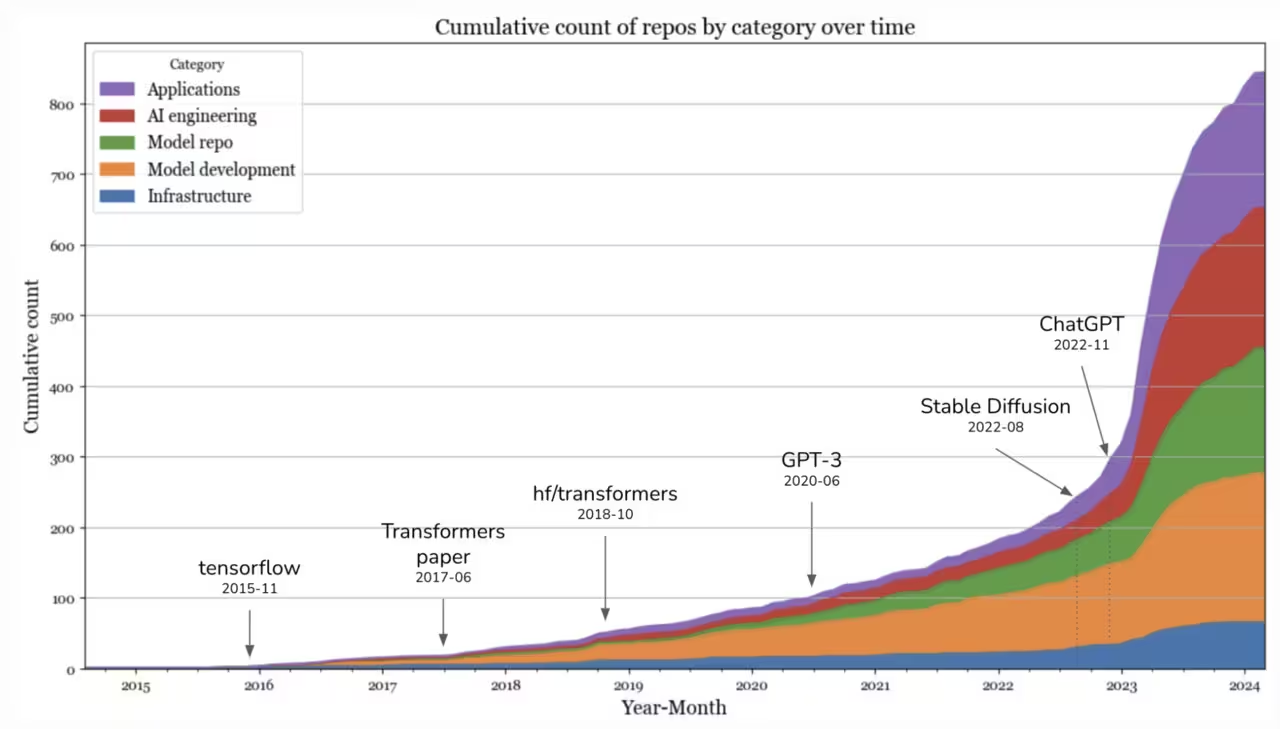

Open source AI repositories: A surge in innovation

Chip Huyen's comprehensive analysis of 845 open-source repositories, each with more than 500 stars, reveals significant insights into the AI tools domain. Following the introduction of transformative technologies like Stable Diffusion and ChatGPT in 2022, the creation of new AI tools exploded in 2023. This surge began to stabilize by September 2023, indicating a shift in the competitive landscape of generative AI and a more grounded approach to AI development.

Focused evolution of AI infrastructure

In AI infrastructure, categories such as compute management (e.g., SkyPilot), serving (e.g., vLLM), and monitoring (e.g., UpTrain) have seen steady growth. The explosion of interest in model development is particularly evident in inference optimization, evaluation, and parameter-efficient finetuning, which points to a concentrated effort to make AI cheaper and more efficient to operate.

The rise of individual-led application development

Application development remains a highly active domain, predominantly driven by individual developers. This segment covers a diverse range of applications, including coding tools, bots, and information aggregation solutions. Interestingly, tools centered around prompt engineering, AI interfaces, agents, and AI engineering frameworks have gained popularity. Applications initiated by individuals tend to attract more attention than those launched by organizations, suggesting the potential for valuable one-person companies in the AI space.

Dominance of tech giants in AI contributions

An analysis of GitHub accounts reveals that 19 out of the top 20 AI contributors are from leading technology companies like Google, OpenAI, and Microsoft, with a notable presence of Chinese developers. This dominance underscores the resources and influence these entities have in shaping the AI ecosystem.

Rapid development and visibility of AI projects

The AI domain has witnessed projects that quickly capture significant attention, only to see interest wane over time. However, the pace at which developers are releasing new AI tools demonstrates an impressive ability to innovate rapidly.

API techniques and tooling

Advancements in model training and compression

- Retrieval augmented fine tuning (RAFT): This technique aims to improve the performance of large language models (LLMs) on Retrieval-Augmented Generation (RAG) tasks by having the model "pre-study" the relevant documents.

- Long context models: Long-context models combined with extensive custom prompts could potentially eliminate the need for traditional fine-tuning, which would mark a significant change in how LLMs absorb new knowledge.

- Model compression: The focus on model compression reflects the need to manage latency and cost. The industry has moved from 16-bit to 2-bit weights, and recent research explores 1-bit quantization, highlighting ongoing efforts to improve model efficiency.
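As a rough illustration of the model compression point, the sketch below quantizes a list of weights to the three values -1, 0, and +1, loosely following the "absmean" idea from the 1.58-bit LLM paper listed in the references: divide each weight by the mean absolute weight, round, and clip. The function names and the flat-list weight format are assumptions made for this example; real implementations work on tensors inside the training loop.

```python
from typing import List, Tuple


def absmean_ternary_quantize(
    weights: List[float], eps: float = 1e-8
) -> Tuple[List[int], float]:
    """Map each weight to -1, 0, or +1 using the mean absolute value as scale."""
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = [
        # Round to the nearest integer, then clip into the range [-1, 1].
        max(-1, min(1, round(w / (scale + eps))))
        for w in weights
    ]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Approximate the original weights from ternary values and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    q, s = absmean_ternary_quantize([0.9, -0.05, -1.2, 0.3])
    print(q, s)
    print(dequantize(q, s))
```

Each weight now needs under two bits of storage plus one shared scale, at the cost of precision: small weights collapse to zero and large ones are capped at the scale value.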

Scalable vector database for enhanced LLM applications

Scalable vector databases are emerging as a crucial answer to the challenges of long-term memory and long-range context dependency in LLM applications. Demand for high-performance vector databases is on the rise, which underscores the importance of advanced indexing algorithms for efficient data retrieval.
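The core operation a vector database performs can be sketched in a few lines: given a query embedding, return the stored items whose embeddings are most similar to it. The example below is a brute-force version using cosine similarity over an in-memory dictionary; the names `cosine_similarity` and `top_k` and the tiny two-dimensional vectors are assumptions for illustration. Production systems replace the linear scan with approximate indexes so that lookups stay fast at scale.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: List[float], index: Dict[str, List[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy "memory" of three documents with made-up 2-D embeddings.
    memory = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    print(top_k([1.0, 0.1], memory, k=2))
```

The linear scan costs one similarity computation per stored vector for every query, which is why indexing algorithms matter once the collection grows large.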

AI startups breaking the VC barrier

A growing number of startups are making their mark with more compelling apps: Patchwork and PointOne, backed by Y Combinator, and rising apps on Product Hunt such as Saner.AI and Vapi. Domains like law, medicine, and support calling each have their own set of challenges. Although there isn't really any new technology in the AI space here, the discipline of organizing and transforming data for use with AI has brought nuance and complexity to these apps, which sets them apart from other software.

Other notable trends

- Rust adoption by Google and Microsoft: While Google advocates for Rust to address memory safety vulnerabilities, Microsoft offers resources for .NET developers to learn Rust.

- Emergence of design engineers: The role of design engineers, who bridge the gap between design and engineering, is becoming increasingly prominent. They are poised to lead in areas such as product architecture, design infrastructure, and R&D.

- TailwindCSS v4 alpha release: The new version, rebuilt with Rust and integrated with the Lightning CSS parser, promises a significant performance boost, with builds up to ten times faster than its predecessor.

References

- RAFT: A new way to teach LLMs to be better at RAG

- What I learned from looking at 900 most popular open source AI tools

- RAFT: Adapting language model to domain specific RAG

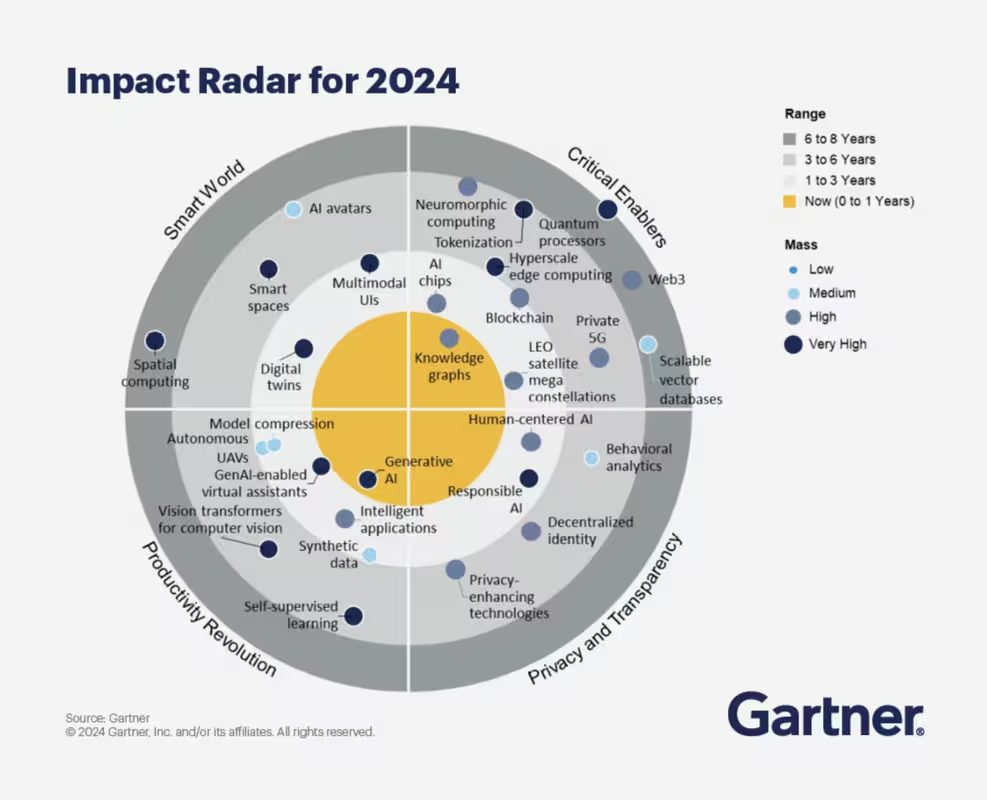

- 30 emerging technologies that will guide your business decisions

- Devin, the first AI software engineer

- Long context models with massive custom prompts

- RAFT - Retrieval augmented fine tuning

- The era of 1-bit LLMs: All large language models are in 1.58 bits

- Secure by design: Google’s perspective on memory safety

- Rust for C#/.NET developers

- Design engineer
